Get in losers, we're moving to Linux!

I've never seen so many developers curious about leaving the Mac and giving Linux a go. Something has really changed in the last few years. Maybe Linux just got better? Maybe powerful mini PCs made it easier? Maybe Apple just fumbled their relationship with developers one too many times? Maybe it's all of it. But whatever the reason, the vibe shift is noticeable.

This is why the future is so hard to predict! People have been joking about "The Year of Linux on the Desktop" since the late 90s. Just like self-driving cars were supposed to be a thing back in 2017. And now, in the year of our Lord 2025, it seems like we're getting both!

I also wouldn't underestimate the cultural influence of a few key people. PewDiePie sharing his journey into Arch and Hyprland with his 110 million followers is important. ThePrimeagen moving to Arch and Hyprland is important. Typecraft teaching beginners how to build an Arch and Hyprland setup from scratch is important (and who I just spoke to about Omarchy). Gabe Newell's Steam Deck being built on Arch and pushing Proton to over 20,000 compatible Linux games is important.

You'll notice a trend here, which is that Arch Linux, a notoriously "difficult" distribution, is at the center of much of this new engagement. Despite the fact that it's been around since 2003! There's nothing new about Arch, but there's something new about the circles of people it's engaging.

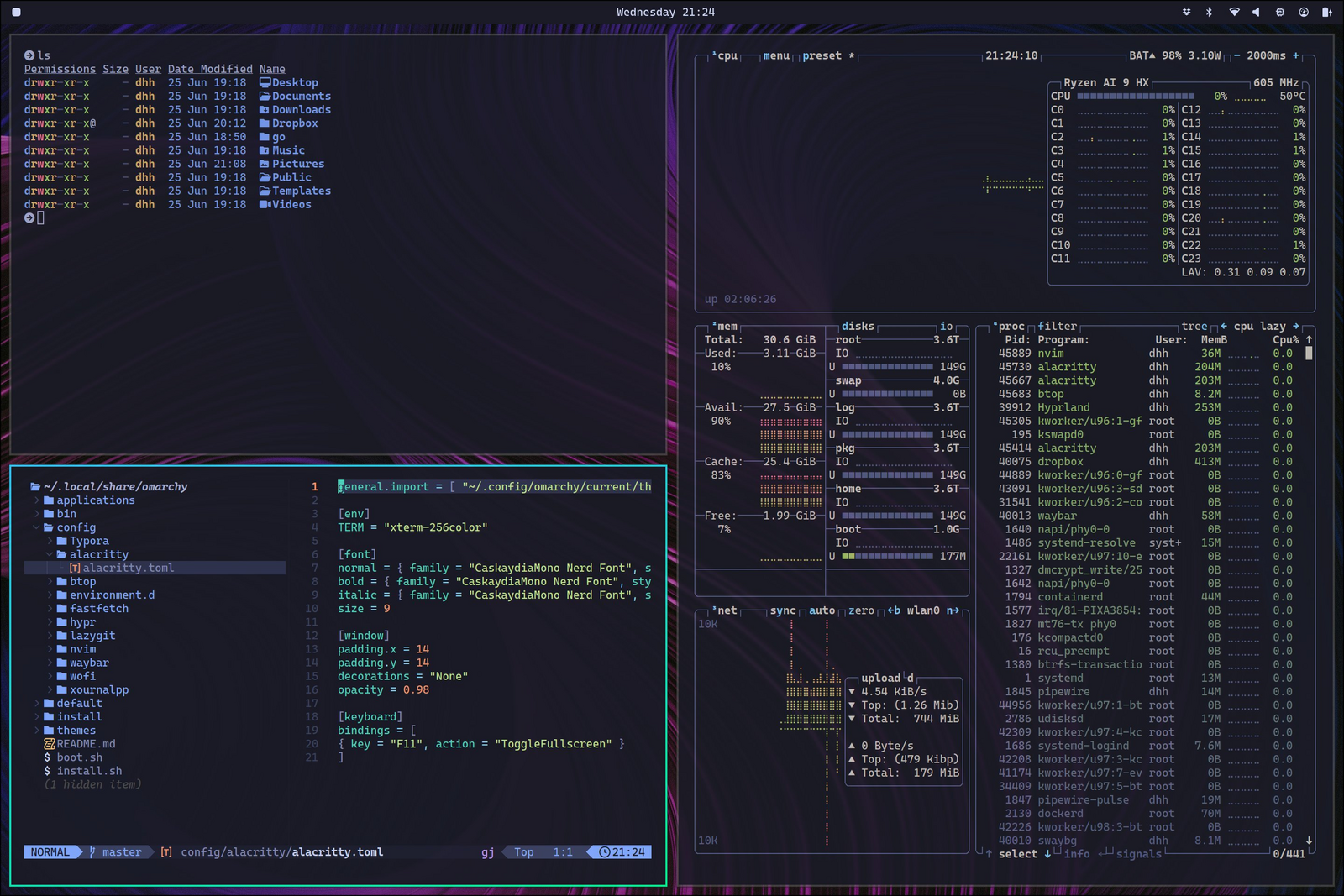

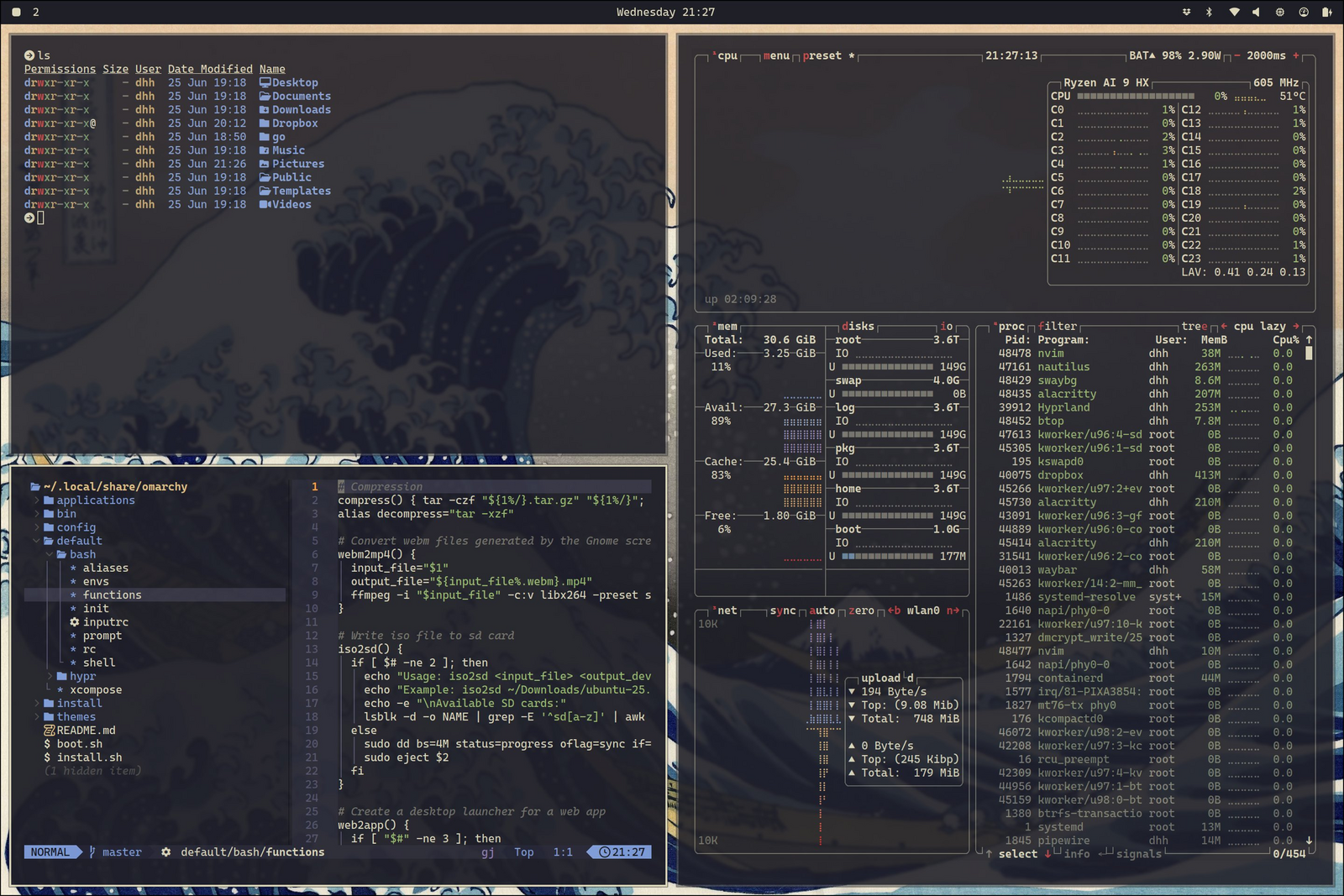

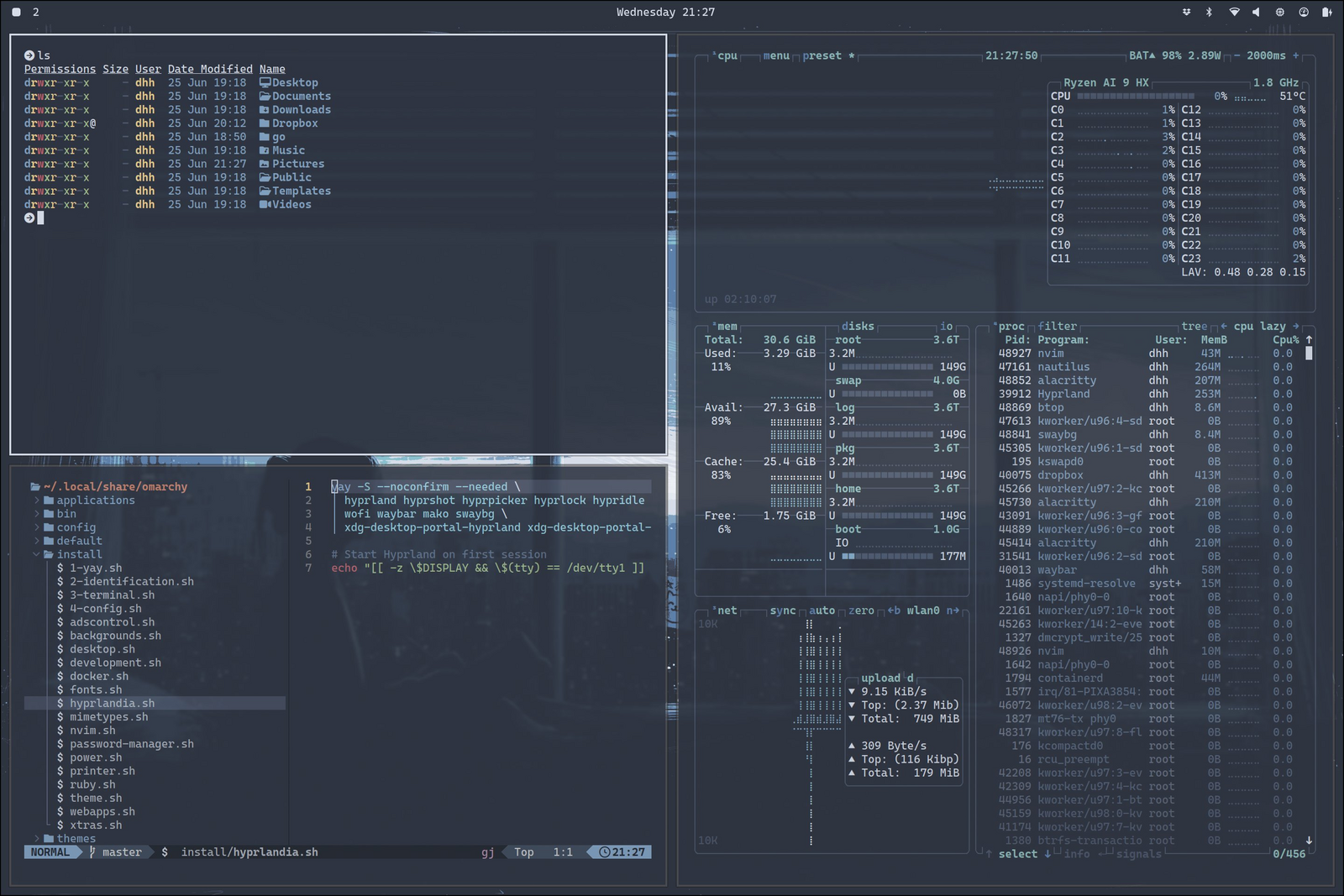

I've put Arch at the center of Omarchy too. Originally just because that was what Hyprland recommended. Then, after living with the wonders of 90,000+ packages on the community-driven AUR package repository, for its own sake. It's really good!

But while Arch (and Hyprland) are having a moment amongst a new crowd, it's also "just" Linux at its core. And Linux really is the star of the show. The perfect, free, and open alternative that was just sitting around waiting for developers to finally have had enough of the commercial offerings from Apple and Microsoft.

Now obviously there's a taste of "new vegan sees vegans everywhere" here. You start talking about Linux, and you'll hear from folks already in the community or those considering the move too. It's easy to confuse what you'd like to be true with what is actually true.

And it's definitely true that Linux is still a niche operating system on the desktop. Even among developers. Apple and Microsoft sit on the lion's share of the market share. But the mind share? They've been losing that fast.

The window is open for a major shift to happen. First gradually, then suddenly. It feels like morning in Linux land!